Just because it is not helpful to your workflow, @OldDesigner, does not mean it is not a helpful tool for many others in theirs. As the support in this thread shows, that seems to be the case. I do not think anyone is advocating replacing the current point-and-click design with natural language. In fact, it could be an option in preferences that you can turn on or off. I BELIEVE that is how Todoist does it, if I remember correctly, but either way, that would be a great way to implement it so OF is a better task management tool for ALL.

Actually, if you check, I was replying to someone else and never intimated you were condescending or anything else, but never mind.

I actually used Todoist for over a year (Pro plan). As for speculating, it was just that: I did not say I was right, merely that it could be the case.

Really, I was just putting forward another side. Personally, I do not think natural language is that important (for me), and I detailed why. Sorry for swimming against the collective groupthink.

You know what they say about assumptions. The assumption that I haven’t used any of those systems is incorrect. My reply and arguments are based both on the idea behind these systems and on real-world usage of them. Instead of making (incorrect) assumptions, it is better to ask.

And that’s the other problem: not everyone lives in an English-speaking country. The applications you mention have been designed primarily for English-speaking countries, which makes their natural language input very hard or even impossible to use with any other language. They may do natural language input well for English, but that doesn’t mean it works as well for other languages. This is extremely important for OmniFocus, since it supports many languages. What good would natural language input do if it isn’t available in one’s native language?

Languages have a certain amount of complexity that is not always easy to capture in code and algorithms. Dutch is such a language, which is why support for Dutch mostly means the text has been translated and a dictionary provided. Grammar checking and all the other fancy stuff is too difficult, so you do not get those. English is actually one of the easiest languages in this respect.

However, the main point still is that OmniFocus doesn’t really need to support it, since it is a standard feature of the operating system it runs on, and it isn’t going to solve the slow data entry at all.

Question back to you: have you tried using the natural language input of these (and other) apps with Siri and also with a language that isn’t English?

A quick word of advice here: be more careful with this subject. The world has far more languages than English, with Chinese spoken by the largest number of people. It can come across as rather arrogant if you only take English into consideration.

It is actually the other way around: you guys have a very different idea of what natural language input is about than what it really is, as well as of what is really causing the slow data entry. And apparently you have also missed the point of the part of my reply you are referring to here. The problem is that some people here are oversimplifying things. Implementing something like natural language input takes quite a lot of effort because of all the other things you have to take into account. Autocomplete was just an example, because it was a rather simple one: the developer of the app can disable autocomplete for the input box. The point was that the developer needs to be aware of the feature and program the app to disable it. However, as I have stated earlier, autocomplete could be a very nice addition to have, so the developer also has to decide whether it is a good idea to disable it or not.

Or in other words: things aren’t as easy and simple as you think. There is more to it than meets the eye.

I think the biggest problem here is that (some of) you are looking at it from a user perspective, whereas I’m looking at it from a software and systems engineering perspective (it is my day job). I know a lot about the technology behind the feature you are using, which is why I’m stepping on the brake here. Some of you seem to have entered a discussion that is over your heads. There are a lot of things you seem to be unaware of.

You are wrong in this case. Natural language input, speech recognition, etc. all require a lot of computing power. The small devices they are used on may pack quite a punch when it comes to computing power, but it is nowhere near enough. That is why a lot of what you do with Siri, Alexa, Google, etc. goes to their servers and is processed there. The way OmniFocus syncs its database has nothing to do with that; you simply need to make certain information available to the natural language input service. If you read up on Siri, you will find that some things are done locally on the device and others are sent to Apple’s servers. It simply comes down to the processing power required. This is going to shift more in the future now that Apple (and others) are designing their SoCs with machine learning, deep learning and neural networks in mind (they made a big deal out of it when introducing the iPhone 8 and X).

I thought I’d add my thoughts here, as I am a recent convert to OF from Todoist and, before that, Things. I thought natural language was a massive thing for me, and it’s what made me leap to Todoist. But, in fact, natural language doesn’t fit the GTD methodology at all. It’s definitely great for adding quick reminders and to-dos. But it’s not great for a process that suggests you use an inbox as a dumping ground, then process that inbox and assign tasks from there. Natural language input means you bypass the inbox entirely. If OF implemented a natural language feature throughout the application, it would (in my mind) cease to be a GTD app and become a generic to-do/reminders app instead.

Not sure if that adds to the conversation here or not but I hope so. Natural language input isn’t the dealbreaker I thought it was…

I used Todoist, came to OF, and love it precisely because it doesn’t have natural language parsing. Natural language input across the entire app would change it from a GTD app to a reminders app (IMHO)…

It is sad to see that people are now using the antispam feature to flag posts they disagree with. Can we all act like adults in this discussion and refrain from childish behaviour such as flagging non-spam posts as such?!

I don’t really get the animosity around this topic. OmniFocus is software that already gives you many different ways to do things. If having NLP functionality like Todoist’s would be more convenient for some users, then why not consider it, perhaps with a toggle that lets users who do not want it simply disable it?

Personally, I’d appreciate it if this were part of OmniFocus - I create and remove repeating tasks fairly regularly based on what I’m doing at work, and writing “Check in with X about Y every Monday” in the quick entry box would be much more convenient to me. I don’t think it’s an all or nothing thing either - there are plenty of tasks I wouldn’t use this functionality for, but there are also plenty of tasks that I would. For me, having a feature like this is about ergonomics: it’s another way in which OmniFocus can adapt to the way I prefer to work, as opposed to the other way around.

Text parsing would be great. I’ve got a work system which automatically e-mails me individual tasks. Those e-mails always follow a templated format: they list the task name, the project, and the due date in the body of the e-mail. If OmniFocus had text parsing, I could forward those e-mails directly into OmniFocus and have OmniFocus do the work of assigning the due date and project name based on the text. As it is, I can forward the e-mails (or use the Mail extension) to put them in OmniFocus, but I still need to enter due dates and project names by hand. It’s a silly amount of duplicated effort when that information is already in the e-mail and is clearly labeled in natural language: “It’s due on Nov 21” or “Related to: Project X.”
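To make this concrete, here is a minimal Python sketch of the kind of parsing described above. It assumes a hypothetical template with a `Task:` line, a `Related to:` line and a phrase like “due on Nov 21”, modelled on the examples quoted in this post; the labels, the `parse_task_email` function and the `ParsedTask` fields are illustrative only, not part of OmniFocus or any real mail system, and getting the result into OmniFocus is left out.

```python
import re
from dataclasses import dataclass
from datetime import date, datetime
from typing import Optional

# Hypothetical template labels, modelled on the quoted examples;
# a real work system's e-mails would need their own patterns.
TASK_RE = re.compile(r"^Task:[ \t]*(?P<name>.+)$", re.MULTILINE)
PROJECT_RE = re.compile(r"^Related to:[ \t]*(?P<project>.+)$", re.MULTILINE)
DUE_RE = re.compile(r"due on (?P<month>[A-Z][a-z]{2}) (?P<day>\d{1,2})")


@dataclass
class ParsedTask:
    name: Optional[str]
    project: Optional[str]
    due: Optional[date]


def parse_task_email(body: str, year: int) -> ParsedTask:
    """Extract task name, project and due date from a templated e-mail body."""
    name = TASK_RE.search(body)
    project = PROJECT_RE.search(body)
    due_match = DUE_RE.search(body)

    due = None
    if due_match:
        # The template omits the year, so the caller supplies it.
        # "%b" expects an abbreviated English month name such as "Nov"
        # (this depends on running with an English/C locale).
        text = f"{due_match['month']} {due_match['day']} {year}"
        due = datetime.strptime(text, "%b %d %Y").date()

    return ParsedTask(
        name=name["name"].strip() if name else None,
        project=project["project"].strip() if project else None,
        due=due,
    )


body = "Task: Submit quarterly report\nRelated to: Project X\nIt's due on Nov 21\n"
print(parse_task_email(body, year=2024))
```

Because the e-mails follow a fixed template, plain pattern matching is enough for this case; a sender with free-form wording would need a more forgiving parser.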

I’d be interested in trying this, but have found Workflow (Shortcuts) confusing. Any chance you could point me in the right direction about how to pursue this, @phukus?

Need natural language and a complete quick add, please! OmniFocus is the best, but adding tasks needs to be faster and easier!

The Fantastical model is brilliant. I love how it shows you an animation of the parsing. For those who have never seen this, it opens a window from the menu bar, displays the text to be parsed (e.g., Lunch with Ted at noon next Friday at French Bistro), then animates filling out the calendar form with the components.

Lunch with Ted → subject of calendar entry

Next Friday → pre-populates the date picker

at French Bistro → opens up the place search with this phrase

Sometimes there is nothing else to do but confirm. Sometimes it takes tiny tweaks. In all cases, it saves time and drudgery. The animation helps you learn how the parser works and makes it easy to get better at typing exactly what you want.

It’s fast and delightful.
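For anyone curious what the Fantastical-style breakdown above involves, here is a rough Python sketch of a rule-based parser that splits “Lunch with Ted at noon next Friday at French Bistro” into subject, date, time and place. This is not how Fantastical actually works (its parser is not public); the rules, the `parse_event` name and the reading of “next Friday” as the first Friday after today are all assumptions for illustration.

```python
import re
from datetime import date, time, timedelta

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"]
DATE_RE = re.compile(r"\bnext (" + "|".join(WEEKDAYS) + r")\b", re.IGNORECASE)
TIME_RE = re.compile(r"\bat (noon|\d{1,2}\s?[ap]m)\b", re.IGNORECASE)
PLACE_RE = re.compile(r"\bat\s+(.+?)\s*$")


def next_weekday(today: date, weekday: int) -> date:
    # Assumption: "next Friday" means the first Friday strictly after today.
    days_ahead = (weekday - today.weekday()) % 7 or 7
    return today + timedelta(days=days_ahead)


def parse_time(token: str) -> time:
    token = token.lower().replace(" ", "")
    if token == "noon":
        return time(12, 0)
    # % 12 maps "12am" to 0; the pm branch then maps "12pm" back to 12.
    hour = int(token[:-2]) % 12
    if token.endswith("pm"):
        hour += 12
    return time(hour, 0)


def cut(text: str, match: re.Match) -> str:
    """Remove a recognised phrase so it does not end up in the subject."""
    return text[: match.start()] + text[match.end():]


def parse_event(text: str, today: date) -> dict:
    fields = {"subject": None, "date": None, "time": None, "place": None}
    rest = text
    if m := DATE_RE.search(rest):
        fields["date"] = next_weekday(today, WEEKDAYS.index(m.group(1).lower()))
        rest = cut(rest, m)
    if m := TIME_RE.search(rest):
        fields["time"] = parse_time(m.group(1))
        rest = cut(rest, m)
    # Whatever follows the remaining "at" is treated as the place.
    if m := PLACE_RE.search(rest):
        fields["place"] = m.group(1)
        rest = cut(rest, m)
    fields["subject"] = " ".join(rest.split())
    return fields


print(parse_event("Lunch with Ted at noon next Friday at French Bistro", today=date(2024, 11, 18)))
```

Showing each piece as it is recognised, the way Fantastical animates it, is then a matter of displaying these fields as they fill in. A real parser would need far more rules, and, as pointed out earlier in the thread, separate rules for each language.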

Agreed, +1 for natural language input. I’m so used to being able to do this in Fantastical that I keep typing it by default in OF too, then stopping myself and changing it or using the date picker, which feels really kludgy in comparison.

OF is a great tool and this might be seen as pedantic, but given how common this is elsewhere, I hope the team treats it as an entirely reasonable feature request!

Is there not a privacy issue here? Natural language processing relies on external servers, which means private data is transmitted across the internet, whereas OF (rightly) makes a big thing of the encryption and security of the app and its data. Surely natural language input would negate that?

The two things seem, at least with the current state of the tech, to be mutually incompatible. Personally, NL is not a big thing for me; I actually like the precision needed to add a task correctly in OF, as it makes me think about whether I have it right or even need it at all.

Not sure where you got that idea. Todoist does this on-device - it works on my iPhone in airplane mode, my laptop while disconnected from any network, etc. It absolutely does not do this on an external server.

That may have been true a loooong time (more than a decade?) ago, but it certainly hasn’t been for some time.

This is something I don’t understand… it’s exactly as precise either way.

“Take out trash on wed 6p #chores” has all of the same info as clicking in 3 separate fields/using the date picker, etc, but I get to do it all in one ‘sentence’ without lifting my hands from the keyboard.

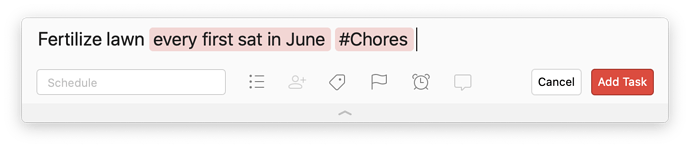

And if you want something repeating, you save a TON of clicking and selecting:

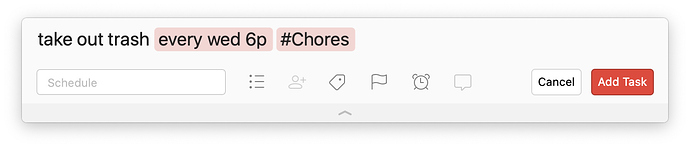

“take out the trash every wed at 6p #chores” does everything, precisely, in one line: it schedules the task for Wednesday at 6p, sets it to repeat every week, and puts it in the Chores project.

I’m not asking to remove the old enter-everything-in-a-separate-field method; all I’m asking is for the “name field” to be parsed for the other info. A simple preference checkbox to enable/disable it.

And no, you don’t need a cloud server to do any of this.
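As an illustration that this kind of parsing can run entirely on-device, here is a small Python sketch that handles the two example entries from this post using plain regular expressions and no network access. It is a hypothetical toy, not Todoist’s or OmniFocus’s implementation: the `QuickEntry` fields, the `#project` convention, and the rules for “on”/“every” plus a weekday and a time like “6p” are assumptions chosen to match these examples.

```python
import re
from dataclasses import dataclass
from datetime import time
from typing import Optional

DAYS = {"mon": 0, "tue": 1, "wed": 2, "thu": 3, "fri": 4, "sat": 5, "sun": 6}

PROJECT_RE = re.compile(r"#(\w+)")
# "every wed" -> weekly repeat, "on wed" -> one-off; "wednesday" also matches.
DAY_RE = re.compile(r"\b(every|on)\s+(mon|tue|wed|thu|fri|sat|sun)\w*\b", re.IGNORECASE)
# Times such as "6p", "6pm", "at 6:30pm" or "11a".
TIME_RE = re.compile(r"\b(?:at\s+)?(\d{1,2})(?::(\d{2}))?\s*([ap])m?\b", re.IGNORECASE)


@dataclass
class QuickEntry:
    name: str
    project: Optional[str] = None
    weekday: Optional[int] = None  # 0 = Monday, matching datetime.weekday()
    at: Optional[time] = None
    repeats_weekly: bool = False


def parse_quick_entry(text: str) -> QuickEntry:
    entry = QuickEntry(name="")
    rest = text

    if m := PROJECT_RE.search(rest):
        entry.project = m.group(1)
        rest = rest[: m.start()] + rest[m.end():]

    if m := DAY_RE.search(rest):
        entry.repeats_weekly = m.group(1).lower() == "every"
        entry.weekday = DAYS[m.group(2).lower()]
        rest = rest[: m.start()] + rest[m.end():]

    if m := TIME_RE.search(rest):
        hour = int(m.group(1)) % 12  # 12a -> 0; 12p becomes 12 below
        if m.group(3).lower() == "p":
            hour += 12
        entry.at = time(hour, int(m.group(2) or 0))
        rest = rest[: m.start()] + rest[m.end():]

    # Whatever is left over becomes the task name.
    entry.name = " ".join(rest.split())
    return entry


print(parse_quick_entry("Take out trash on wed 6p #chores"))
print(parse_quick_entry("take out the trash every wed at 6p #chores"))
```

Everything here is local string matching. The harder parts raised in this thread (many more phrasings, other languages, showing the parsed fields as you type) are where the real implementation effort would go.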

Precision is not the correct word here, but I think I understand what is meant. Your example unknowingly describes the very problem. You have 1 field holding the information of 3 (or even more) fields. In other words, you are not seeing all of the information that can be entered. For some, that can lead to items being created with half the information forgotten (which contradicts what OmniFocus is meant to do). Having some visual cues (read: seeing all the fields) can help with that. This is the reason why many people use sticky notes and put them on the front of their monitor, right where they can see them.

NLP says nothing about whether it is done on-device or in the cloud. It can be either one or both (as Apple does with Siri). It really depends on how the developer has chosen to implement it. I can imagine they’d use an external service so they don’t have to reinvent the wheel; it simply reduces their workload (especially when they want to support multiple languages). The only way to find this out is to contact the developer (if they offer the product/service in the EU, they have to mention it due to the GDPR).

If you want to know where @TheOldDesigner got the idea from, take a look at what Amazon, Google and Microsoft offer as services to developers. Microsoft’s Visual Studio Code is a good example of an application that makes use of such a service from Microsoft. It uses NLP for searching through its settings, and that is an online-only service Microsoft offers. In other words, searching the settings of Visual Studio Code will transmit everything you entered to Microsoft’s servers in the US. They do provide an option to turn this off.

The more problematic implementations are the ones that use both ways (on-device and cloud), with one being the primary and the other the fallback. Such an app may look like it is working offline, while in reality it normally sends your input to the cloud and only falls back to on-device processing when there is no connection.

The danger with what I would call “off-hand data entry” is that NL somewhat reduces the need to actually be “with” the task while you write it. Is it correctly formatted, is it relevant, does it move the project forward, could I break it down or word it better? For me, anyway, NL takes that away somewhat and risks my system becoming corrupted.

I can see it working for appointments, where it’s more clear-cut: you either have an appointment/meeting or you don’t. Tasks are very different.

But that’s just me.

Why would you only see 1 field? This is the frustrating part for me… there are several examples of how this has been implemented, and yet so many here shoot it down without actually taking a look. Here is the example from Todoist:

Nothing is hidden here. You see every field that can be entered, and as you type you see exactly what the parser has picked up from the one-line entry.

Here’s another one that would take many clicks and several windows to do in OF:

I get that… which is why, just like with many other options, the user can use it or not. For some it works, for some it doesn’t. No one is ever forced to use it - Todoist also has a setting to turn it off.

Many productivity apps have implemented this. Fantastical and Todoist do it best, but Things, Apple Reminders, Google, Remember the Milk, etc. all parse the “input field” to auto-fill the task info. It’s become almost a “standard” feature in the industry at this point.

All I want is the option.

> there are several examples of how this has been implemented and yet so many here shoot it down without actually taking a look.

I find that super weird, also. It’s almost like people take pride in doing it the hard way, like it’s a rite of passage. Or maybe it’s Todoist envy. Natural language input as an alternative you can use by choice is so obviously badass once you’ve used it.

Sigh.